Reports vs Analytics: Technology Deep Dive

Discover how analytics are produced and why transactional solutions can only support reports. This is an introduction to people analytics technology.

This article is the second in a two-part series about the differences between Reports and Analytics. Learn more about the functional differences of each in Reports vs Analytics: What’s the Difference?

In an earlier blog post called Reports vs Analytics: What’s the Difference?, I showed how “reports provide data; analytics provide insight.” The goal there was to introduce the idea that these differences can impact decision-making.

For example, a report on hundreds of employees tells you about hundreds of employees, but an analysis of all this employee data can illustrate the connection between things like new hire training and turnover. With this insight, HR leaders can make the business case for key programs.

Now we are ready to do a deeper dive. This will allow you to understand how analytics are produced and why transactional solutions can only support reports. Let’s take a look under the hood.

Transactional solutions

Transactional systems–such as your HRMS, Learning Management System (LMS), or Accounts Payable system–provide the data for basic reports. These systems store data in tables, chunking it out so that they only need to pull forward exactly what is necessary to support the task at hand, such as creating a new purchase record, updating accounts receivables, or requesting time off.

This means that data related to the same object, such as a purchase order or person, is spread across multiple tables. While this data storage structure is optimal for transactions, it is less than ideal for reporting, and not suited at all for analytics.

Almost all transactional systems offer reporting of some kind, and although usability and performance vary widely, even the best reporting feature is still limited by the table-based structure behind the system. Another limitation is that the data is isolated at the source, so it takes work to bring separate but related data together to show a bigger picture.

To report on data that spans multiple tables, you need to build queries that join those tables.

A join matches rows from two or more tables on a column they have in common, such as an employee ID, so that the related rows can be lined up side by side.

Each join is a drain on performance, and some tables can’t be joined at all due to their structure–there’s simply nowhere that the tables neatly overlap. If you have ever written reports, you know this already, but in case you haven’t, here’s an example:

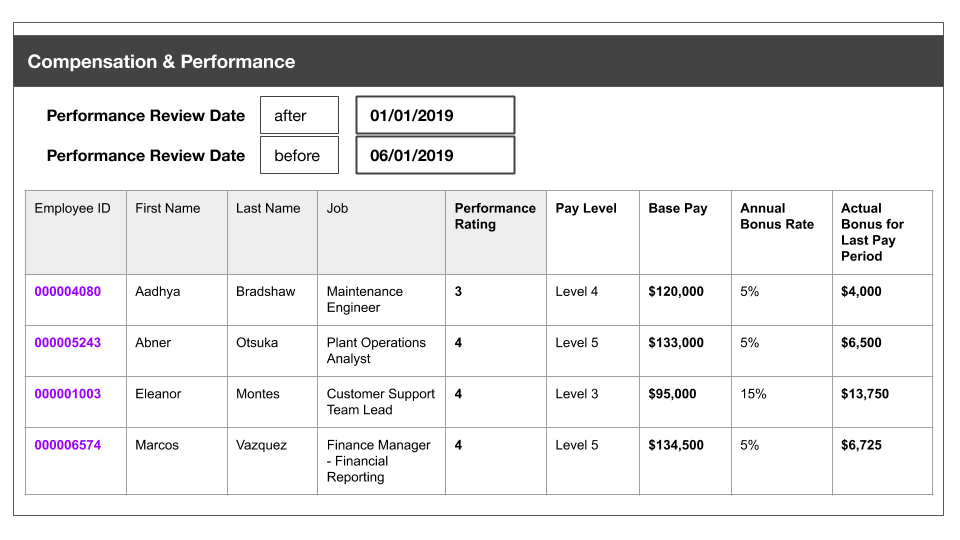

A common report requested by business leaders is one that shows what high performers are earning compared to average or low-performing employees.

An employee’s performance data resides on one record, while their compensation data resides elsewhere, sometimes on multiple records depending on how the employee is compensated. To answer the question of how high-performing employees are being paid compared to their colleagues, data must be pulled from multiple tables, joining on the employee ID.
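To make the join concrete, here is a minimal sketch using Python’s built-in sqlite3 module. The table names, column names, and figures are hypothetical and are not taken from any particular HRMS; the point is only to show performance and compensation rows being matched on the employee ID, with multiple compensation records rolled up per person.

```python
import sqlite3

# In-memory database with two hypothetical tables that share employee_id
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE performance (employee_id INTEGER, rating TEXT);
    CREATE TABLE compensation (employee_id INTEGER, pay_type TEXT, amount REAL);
    INSERT INTO performance VALUES (1, 'High'), (2, 'Average'), (3, 'Low');
    INSERT INTO compensation VALUES
        (1, 'base', 90000), (1, 'bonus', 10000),
        (2, 'base', 70000),
        (3, 'base', 60000);
""")

# Join on the shared column, then sum each employee's compensation records
rows = conn.execute("""
    SELECT p.rating, p.employee_id, SUM(c.amount) AS total_pay
    FROM performance AS p
    JOIN compensation AS c ON c.employee_id = p.employee_id
    GROUP BY p.rating, p.employee_id
    ORDER BY total_pay DESC
""").fetchall()

for rating, employee_id, total_pay in rows:
    print(rating, employee_id, total_pay)
```

Even in this toy case, the query has to know how compensation is split across records. Every extra table adds another join and another place for the report to slow down or go wrong.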

Examples of tables that are difficult or impossible to join because the structures provide no overlap.

If all of this data resided in our transaction system, we could create a report that looked like this:


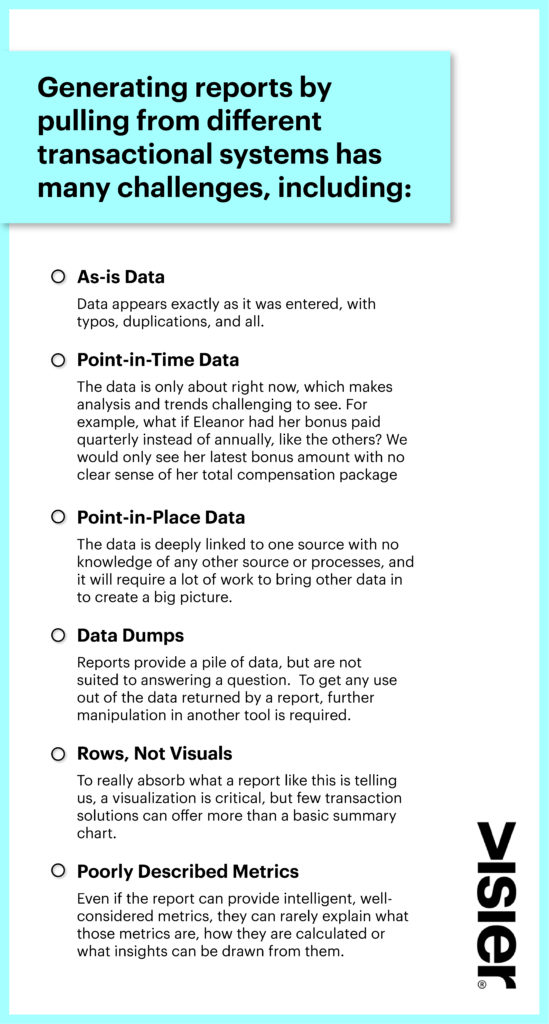

The challenges of transactional system reports

Graphic that shows the different challenges of generating reports from transactional systems

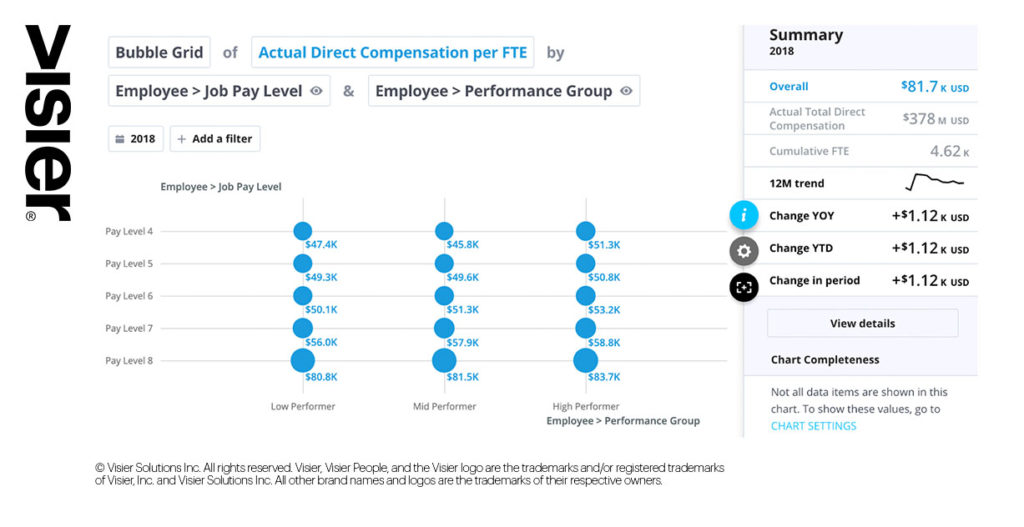

The above limits make it difficult to answer the business leader’s real question, which is: “Are we paying high performers in a way that reflects their value to the organization?” A list of data rows will not provide that answer. At the end of the day, she needs something like this:

The above analysis is far more useful than a table because it has:

Normalized and unified the review data of every employee.

Determined the correct, single performance rating for every person as of the reporting period, regardless of how many performance reviews were conducted or how many jobs a person holds.

Calculated the actual compensation per employee for the period selected, including variable compensation.

Visualized the total compensation, so that anyone can see where there are trends.

Been generated with dynamic filters and controls to allow analysts and business leaders to change the view and retrieve new insight in real time. For example, the business leader can filter results by tenure to explore possible causes for the small gaps between groups at Pay Level 5. The solution would be able to derive Tenure based on the employee start date.

Given users the ability to drill down to the individual people who make up the visual (“Who are our High Performers at Pay Level 8?”).
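The steps in this list can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sample records, the function names (rating_as_of, tenure_years, average_total_pay), and the choice to count tenure in whole 365-day years are all hypothetical, not how any specific analytics product works.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Hypothetical sample data
reviews = [  # (employee_id, review_date, rating)
    (1, date(2023, 6, 30), "Average"),
    (1, date(2023, 12, 31), "High"),
    (2, date(2023, 12, 31), "Average"),
    (3, date(2023, 12, 31), "High"),
]
pay = [  # (employee_id, pay_level, base, variable)
    (1, 5, 80000, 8000),
    (2, 5, 78000, 2000),
    (3, 8, 120000, 30000),
]
start_dates = {1: date(2019, 3, 1), 2: date(2022, 9, 15), 3: date(2015, 1, 5)}


def rating_as_of(employee_id, as_of):
    """Return the single most recent rating on or before as_of, or None."""
    dated = [(d, r) for e, d, r in reviews if e == employee_id and d <= as_of]
    return max(dated)[1] if dated else None


def tenure_years(employee_id, as_of):
    """Derive tenure from the start date (whole 365-day years, an assumption)."""
    return (as_of - start_dates[employee_id]).days // 365


def average_total_pay(as_of, min_tenure=0):
    """Average base plus variable pay per (pay level, rating) group."""
    groups = defaultdict(list)
    for emp, level, base, variable in pay:
        if tenure_years(emp, as_of) < min_tenure:
            continue  # dynamic filter: skip employees below the tenure cutoff
        groups[(level, rating_as_of(emp, as_of))].append(base + variable)
    return {key: mean(values) for key, values in groups.items()}


period_end = date(2023, 12, 31)
summary = average_total_pay(period_end)
filtered = average_total_pay(period_end, min_tenure=3)
print(summary)
print(filtered)
```

Changing min_tenure plays the role of the tenure filter described above: the same calculation is rerun against a different slice of people, rather than a new report being written.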

The capabilities to create the above analysis simply aren’t supported by transactional systems.

So if these systems are not designed to support analytics, then which ones do? How are those solutions different?

Analytic Technology

Unlike transaction reports that just display the data that is there, analytics summarizes information and processes into metrics and measures, leading to insight. To be transformed into those metrics, measures, and ultimately insight, the data must pass through layers of technology. The exact nature of those layers differs slightly depending on the approach that you take and the output that you want.

At a really high level, these layers are:

Data integration

Data storage

Data model

Report writer, management, & visualization

Metadata layer

Security layer

Layers to analytics

Traditionally, the functions of these layers have been performed by different tool sets and configurations that are designed specifically for the organization. When you look at this list of requirements, it’s quite easy to see why a lot of analytics projects that are built in-house take a long time to go live.

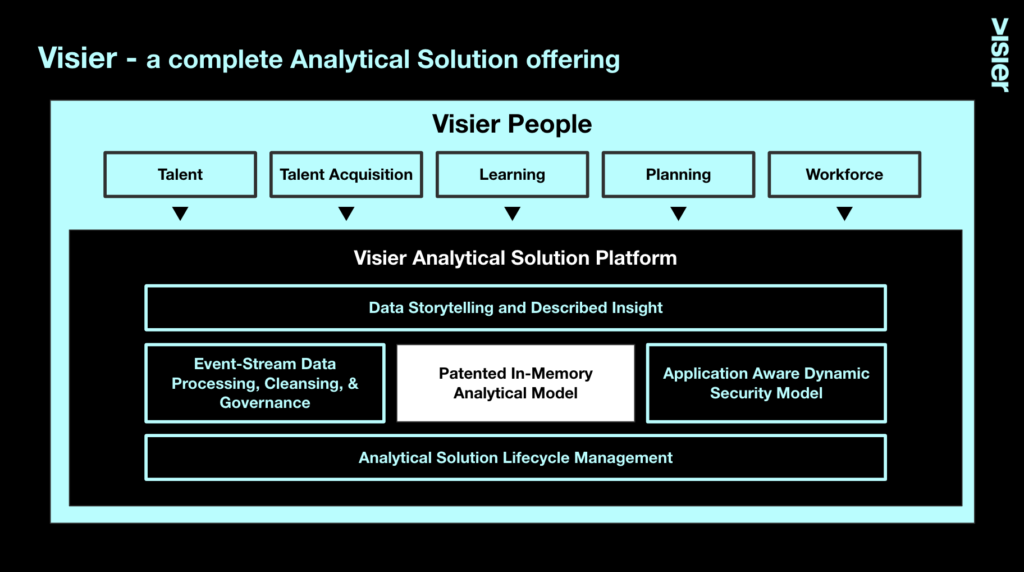

More recently, cloud vendors of data analytics, such as Visier, have found ways to collapse these layers into a single solution that’s purpose-built for people analytics, greatly reducing the time needed to get through the layers and to the insight. We’ll cover what a unified solution looks like after breaking down the layers below:

Data integration

Data analytics projects bring together data from different sources so that it can be queried for insight. For this to work, a few things need to happen:

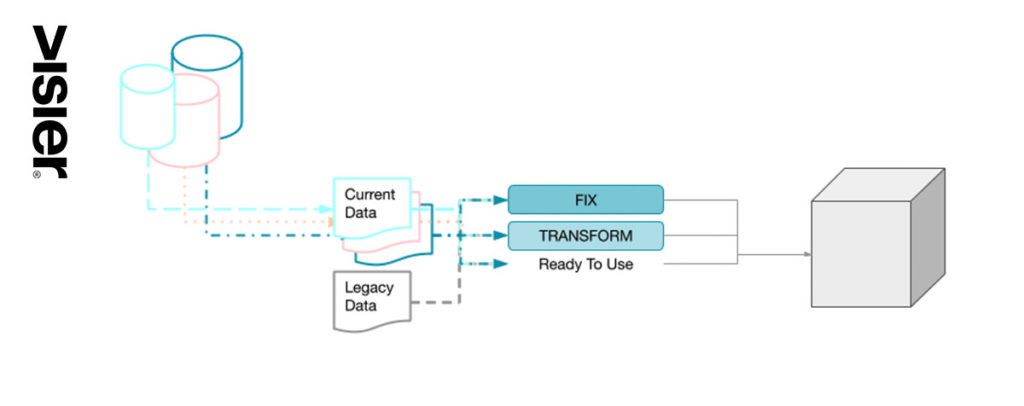

Extraction: The data needs to be pulled from the source where it was created, or where it currently resides. If you’re not sure of the values that you need, there is a risk of pulling the wrong information or pulling it in the wrong format. But if you know what’s needed – how the values from your source system will map to your analytics solution – this step is very straightforward.

Cleanup and Transformation: Often collapsed under the single step of transformation, there are actually two things that happen here, data cleanup and data transformation:

Data Cleanup

For a variety of reasons, data can be a mess. People enter the same record twice, person A uses different codes from person B, or people enter nonsense just to save the record. This noise needs to be stripped away so that the data can paint a clear picture. The best and quickest way to clean data is to analyze it in the raw form so that the outliers stand out and can be addressed. The hardest way is to try to comb through the rows manually. This is the surest way to halt your project before you even get started.

Data Transformation

Data is frequently stored in different formats in different systems. For example, you could have two performance systems, one that uses a 5-point rating scale and one that uses a 4-point scale. Or the difference could be as simple as using different abbreviations for locations (CA versus Cali or California). Rather than changing everything at the source, you can convert the data to a common format as it gets loaded into the data store.
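Here is a rough sketch of what cleanup and transformation might look like in code. Everything in it is an assumption made for illustration: the sample rows, the LOCATION_ALIASES lookup, and in particular the linear rescaling of ratings, which is just one possible way to put a 4-point scale on a 5-point one. An organization may well prefer an explicit mapping agreed with HR.

```python
# Hypothetical raw rows: (employee_id, location, rating, rating_scale_max)
raw = [
    (1, "CA", 4, 5),
    (1, "CA", 4, 5),          # the same record entered twice
    (2, "Cali", 3, 4),
    (3, "California", 2, 4),
    (4, "xx", 5, 5),          # nonsense entered just to save the record
]

# Known spellings of each location mapped to one standard code
LOCATION_ALIASES = {"CA": "CA", "Cali": "CA", "California": "CA"}


def clean(rows):
    """Drop exact duplicates and set aside rows with unrecognized locations."""
    seen, kept, rejected = set(), [], []
    for row in rows:
        if row in seen:
            continue
        seen.add(row)
        (kept if row[1] in LOCATION_ALIASES else rejected).append(row)
    return kept, rejected


def transform(row, target_max=5):
    """Standardize the location code and rescale the rating to target_max."""
    emp, loc, rating, scale_max = row
    # Linear rescale: 1 stays 1, scale_max becomes target_max (an assumption)
    rescaled = 1 + (rating - 1) * (target_max - 1) / (scale_max - 1)
    return emp, LOCATION_ALIASES[loc], round(rescaled, 2)


kept, rejected = clean(raw)
transformed = [transform(row) for row in kept]
print(transformed)
print("needs review:", rejected)
```

Rejected rows are set aside for review rather than silently dropped, so that someone who knows the data can decide whether they are noise or a code the lookup is missing.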

Load: This is the process of loading the data into the data store. This step involves data mapping–mapping the data from the source systems to its new home in the data store. This requires intimate knowledge of the data store structure, and the data being loaded. Without familiarity with the incoming values, the data could end up miscategorized.

Data storage

Wrapped up in this topic is data governance — identifying the good from the bad, the usable from the unusable, and so on. This can require a lot of work, from catalogs and metadata, to strict processes, and more.


A data warehouse is an integrated repository of data from disparate sources.

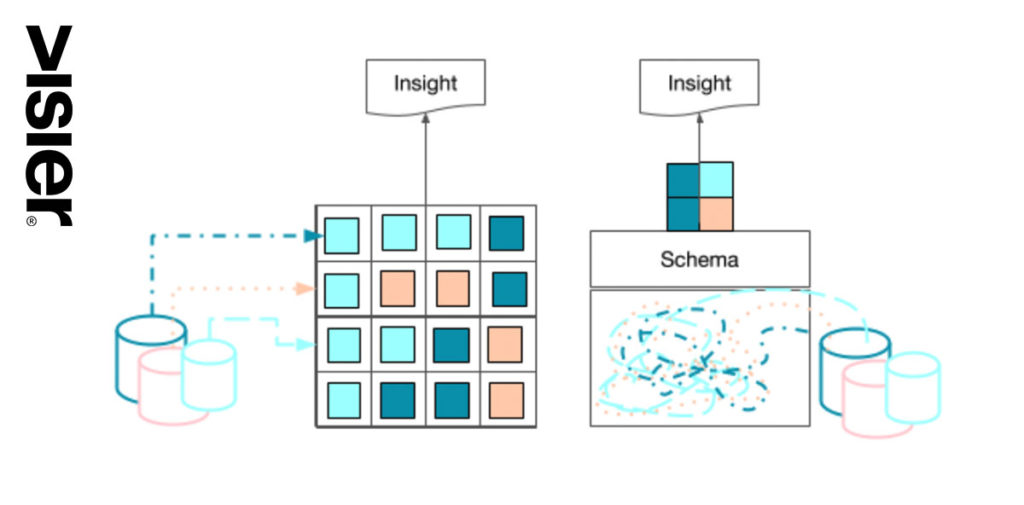

The key word in this definition is integrated. The data isn’t just dropped into the database, it is mapped to a data model that has been built within the data warehouse.

The warehouse owner constructs the model to connect the data as needed in order to facilitate queries down the road. In order to build a data model, you have to know what data you need and how it connects. Data is loaded into the data warehouse and resides in its place within the model.

Data lakes

A data lake is an unstructured repository of data. Data lakes sprang up as an alternative to the data warehouse because they don’t include a data model within the data lake, and this allows the storage of any data, in any format.

This greatly reduces the time spent loading data, and it means that you can store data without having a place for it. You can store all of your data in the data lake and only spend time modeling it when you’re ready to use it.

Graphic showing how a data lake works

Data model

Data models connect data to support analytics. Where we used joins to connect tables in transaction systems, data models allow for multiple connections to multiple data points, but for them to support meaningful inquiry, the connections and the data should be well understood by the person building the model.

Done right, the model connects the relevant data and metrics, so data analysts and data scientists can access the information they need and trust that the values behind each insight are the correct ones.

Data warehouse data model

The data warehouse houses the data model so every piece of data that’s loaded has a place.

Think of it a bit like organizing your garage – you figure out where all of your stuff goes, keeping sports equipment with sports equipment, tools above the toolbench, and so on. When you’re done, it looks great and everything is in reach. But this can pose a challenge for maintenance and expansion. What if you buy a new tool? Is there somewhere for it on your toolbench? What if you start camping? Where do you put your camping gear?

Maintaining a data warehouse that can grow and expand with an organization’s analytics needs is a big job that requires constant work and rework. But the end result is a solution that data scientists can query.

Data lake data model

In contrast, the data lake is more like my garage – just toss things in and shut the door. The organization of the data only comes when it’s time to get it out. So until it’s needed, it can just sit wherever.

This saves a lot of time on the front end, but as you can imagine, it imposes a lot of work on the backend to make the data accessible.

It also means that the data used to inform measures like turnover, or total compensation, or revenue, may not be consistent over time if the data isn’t standardized on the way in or if the person accessing it uses a different query.
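The difference between the two approaches is sometimes described as modeling on the way in versus modeling on the way out. The sketch below is a simplified illustration of that idea, not a description of any real warehouse or lake product; the column list, the function names, and the sample payloads are all hypothetical.

```python
import json

# Warehouse-style: every record must fit the model before it is stored
WAREHOUSE_COLUMNS = ("employee_id", "location", "start_date")


def load_into_warehouse(table, record):
    missing = [c for c in WAREHOUSE_COLUMNS if c not in record]
    if missing:
        raise ValueError(f"record does not fit the model, missing: {missing}")
    table.append(tuple(record[c] for c in WAREHOUSE_COLUMNS))


# Lake-style: store anything as-is and interpret it only when reading
def load_into_lake(lake, payload):
    lake.append(payload)  # raw text, no checks


def read_locations(lake):
    """The modeling work happens here, at read time."""
    found = []
    for payload in lake:
        try:
            doc = json.loads(payload)
        except json.JSONDecodeError:
            continue  # not JSON, so this reader cannot use it
        if isinstance(doc, dict):
            location = doc.get("location") or doc.get("loc")
            if location:
                found.append(location)
    return found


warehouse, lake = [], []
load_into_warehouse(
    warehouse, {"employee_id": 1, "location": "CA", "start_date": "2020-01-01"}
)
for payload in (
    '{"employee_id": 1, "location": "CA"}',
    '{"emp": 2, "loc": "NY"}',
    "free-text note",
):
    load_into_lake(lake, payload)

locations = read_locations(lake)
print(locations)
```

Notice that read_locations has to guess at field names. A second analyst who only looked for "location" would get a different answer from the same lake, which is the consistency problem described above.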

Another challenge is securing the data in the data lake. Since there’s no organization to the data, it’s not possible to delineate what someone should or should not have access to. This means that only a handful of people can extract data, and that puts a limit on how often and how many kinds of analytics you can run against a data lake.

Metadata management

Whether the data is stored in a lake or a warehouse, there needs to be some kind of standardization of format, language, spelling, and metrics (calculations involving one or more data points). Without this it would be impossible to write a report that pulled all of the relevant values, or compare values from one analysis to another. It’s important to set a standard for the organization that everyone uses.

Standards can be generic, such as setting a date format or location codes, or they can be domain-specific. An example of a domain-specific metric requiring standardization is voluntary turnover. To measure this accurately and to compare numbers over time or across jobs and departments, every report and analysis that uses the metric must calculate it the same way.

This requires standardization, but standardization that’s meaningful to the domain–in this case HR. It’s important that the people doing the metadata management have a deep understanding of the needs of the business that will be served by the data when setting the standards.
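One way to picture a standardized metric is as a single shared definition that every report calls, instead of each report carrying its own formula. The sketch below assumes one common convention for voluntary turnover (voluntary exits divided by average headcount for the period); that formula and the function name are assumptions for illustration, and your organization’s agreed definition may differ.

```python
def voluntary_turnover_rate(voluntary_exits, headcount_start, headcount_end):
    """Voluntary exits divided by average headcount for the period.

    This formula is an assumed convention, not a definition from the article.
    """
    average_headcount = (headcount_start + headcount_end) / 2
    if average_headcount == 0:
        raise ValueError("average headcount must be greater than zero")
    return voluntary_exits / average_headcount


# Two different reports reuse the same definition, so their numbers compare
engineering_rate = voluntary_turnover_rate(12, 200, 220)
sales_rate = voluntary_turnover_rate(9, 100, 80)
print(f"Engineering: {engineering_rate:.1%}, Sales: {sales_rate:.1%}")
```

Because both figures come from the same function, a difference between them reflects the workforce rather than a difference in how two report writers did the math.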

Finally, the metadata management scheme that’s put in place needs to make sense for the users accessing and using the data, as well as those managing and preserving the data.

Report writer, report management, and visualization

When there’s a need for analytic insight into a problem or area of the business, it’s time to write a report that will pull out the information needed. The report writer enables you to extract the exact information from the data store in a specified format. To visualize the data using bar charts, pie charts, or other diagrams, you need a visualization tool on top. Once you have created a report, you can save the specifications and reuse it.

Because data warehouses have structured the data already, they are easier to query with reporting tools than data lakes, although writing reports for either solution remains a specialized skill set, limiting who can successfully leverage the data. Some providers estimate that it takes almost 100 report writers to support about 1000 report consumers.

Security

Security is a factor at every step of this journey, but the rubber really hits the road when we’re talking about creating and securing the resulting analytics. Who has permission to write analytic reports against the data store and extract data? What data can be extracted?

For example, the manager of Location A has the right to see the data of the Location A workers, but not that of the Location B workers, and vice versa for the manager of Location B. In order to allow the managers to directly pull their own information, your security layer would need to ensure that they’re only pulling the data for their own location.

Ideally, there would be security at the data layer that could filter the data itself, but more often it’s the report definition itself that filters the data. Manager A has a report that filters for Location A and Manager B has a report that filters for Location B. You can imagine how this can quickly result in a lot of different analytic reports. The final security element is who can run existing reports. Does the solution authenticate the user or can anyone run a report if they have a link to it?
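As a rough illustration of security at the data layer, the sketch below filters rows by the requesting user before any report logic runs, so a single report definition can serve both managers. The access table, worker records, and function names are hypothetical; a real solution would tie this to authenticated identities and a proper permissions store.

```python
# Hypothetical access table: which locations each user may see
USER_LOCATIONS = {
    "manager_a": {"Location A"},
    "manager_b": {"Location B"},
}

WORKERS = [
    {"name": "Ana", "location": "Location A"},
    {"name": "Ben", "location": "Location B"},
    {"name": "Cho", "location": "Location A"},
]


def rows_visible_to(user, rows):
    """Data-layer filter: return only the rows this user is allowed to see."""
    allowed = USER_LOCATIONS.get(user)
    if allowed is None:
        raise PermissionError(f"unknown user: {user}")
    return [row for row in rows if row["location"] in allowed]


def headcount_report(user):
    """One report definition; the data layer decides what it can read."""
    visible = rows_visible_to(user, WORKERS)
    return {"headcount": len(visible), "names": [r["name"] for r in visible]}


report_a = headcount_report("manager_a")
report_b = headcount_report("manager_b")
print(report_a)
print(report_b)
```

With the filter living below the report, adding a Location C manager means adding one entry to the access table rather than writing and maintaining another copy of the report.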

Securing your organization’s data is critical, so it’s important to consider the downstream effects of all of your earlier decisions so that when you finally are at a point to start creating and sharing analytic reports, you are able to do so securely.

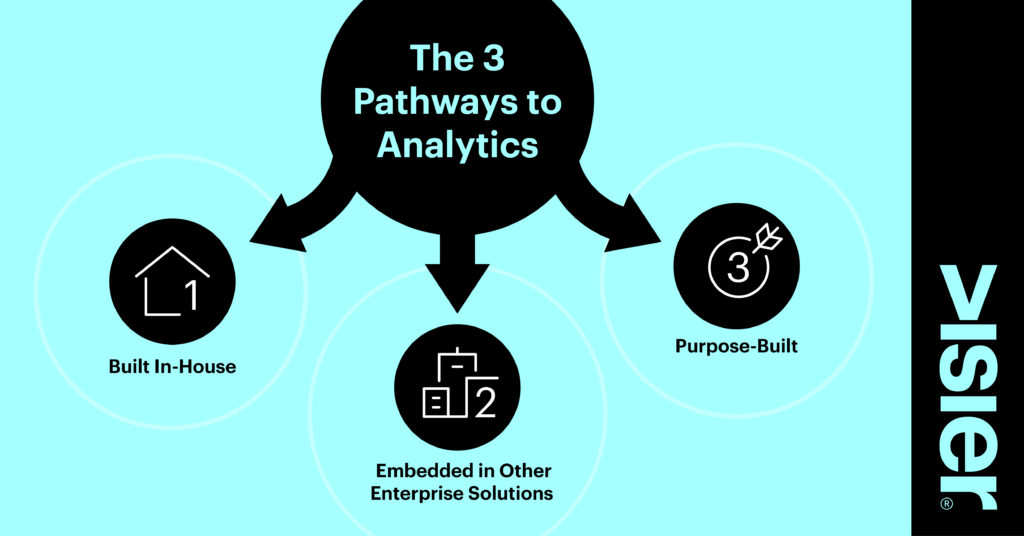

Pathways to analytics

Now that you have an overview of the layers required to create and support analytics, let’s review how you can get analytics in place. This is where time-to-value becomes really crucial: as our Chief Product Officer writes in this blog post, timing is everything when it comes to business transformation.

Companies have to deliver when the market, ecosystem, and customers are ready. This means that, when it comes to delivering analytics as an enabler of business transformation, it’s important to determine which pathway delivers the best time-to-value.

1. Building

The traditional approach to analytics has been to build analytics in-house. Most IT departments have a group devoted to data analytics for the organization and they’ve built out the infrastructure to produce analytic reports for the business.

It is important to note that having and building the infrastructure doesn’t mean that it’s “done.” At the very minimum, even if the analytic infrastructure weren’t going to grow the type and source of data it supports, it still requires constant maintenance. Furthermore, the needs of the business are always changing. The ideal analytics last year, even last month, may no longer provide the right insight.

Moreover, the addition of a new business domain such as HR requires significant effort. If we think back to our garage analogy from before, an IT department with a well-organized garage that stores camping gear, sports equipment, and tools is not necessarily ready to store your gardening supplies.

New domains require new data models, new metadata schemas, and new security considerations, as well as new analytic reports, visualizations, and a way to get the reports into the hands of the users.

This means a lot of work for IT, and for them to create an analytic solution that is meaningful to the new business domain, it requires expertise in the business and the data of the business as well.

2. Embedding

It is a testament to just how significant analytics has become that all of the big enterprise vendors are offering “embedded” analytic solutions, sometimes even going so far as to offer the products for free.

It certainly gives the appearance of a seamless solution, but the technology that supports the enterprise’s transactions systems is fundamentally different from the technology that supports and produces analytics.

The enterprise vendors have acquired a set of analytic tools and have bundled them as an offering to go with their transaction solutions. However, ETL, data modeling, report writing, and so on are all still necessary, which means that the expertise and time are still needed too. Many vendors do offer consulting services to get you started with analytic reporting using their toolsets if you don’t have the staff or expertise in-house.

3. Purpose-Built Renting

The final pathway to analytics is via complete, purpose-built, cloud analytical solutions. These solutions are characterized by a few things:

Hosted

Complete

Purpose-built

Hosted

The solution is hosted and maintained by the vendor. This means that the work of building, maintaining, updating, and so on resides with the vendor. It also means that your data needs to be uploaded to the vendor’s cloud, but this is not usually an onerous task to set up and it can be automated. Not having to take ownership of those other tasks is a significant benefit. If security is a concern, good vendors will be able to describe their security processes and certifications.

Complete

Most hosted analytic solutions on the market offer most or all of the technical layers required to support analytics. Some start at the very beginning with ETL, followed by data storage and organization, a metadata schema, and finally, reports and visualizations. Almost all will offer user security, although it’s important to get a good understanding of how each vendor supports it.

Many have developed their own technological advancements to improve the performance and functionality over the layers available to do-it-yourselfers. While some IT analytics professionals don’t like the idea of putting control of the analytic process into the hands of a vendor, there is a lot of time and expensive effort to be saved by doing so.

Purpose-Built

Purpose-built refers to what kind of analytics the solution was built to serve. In order to provide a data model and pre-written analytic reports and visualizations, the solution needs to have a well-defined business domain, or subset of a domain, that it serves.

Solutions that solve for the analytic needs of a business domain are able to address some of the issues that often stall or derail in-house projects. Here are three elements that make this possible:

Metadata schema: The metadata schemas are pre-defined to suit the domain. For example, in a People Analytics solution, not only is Voluntary Turnover defined, it’s defined according to HR best practice.

Defined analytics: More than 80% of any business domain’s questions are the same. A domain-specific solution has identified those questions and is prepared to answer them, saving a huge amount of time for report writers. There will always be unique questions for any organization, but rather than either not being able to get to them at all or answering them at the expense of all the other questions, in-house report writers can leverage a purpose-built solution for the 80% and focus their time on the 20%.

Identified data: Along with saving time writing reports, time is also saved by having a complete inventory of the data needed to answer the identified questions.

A purpose-built, rented solution need not replace an in-house or embedded solution.

It can live within that infrastructure, ingesting and transforming data and sharing data and insight. It can either take over an entire domain so that the in-house resources can focus elsewhere, or serve as the business users’ analytic solution, freeing the data scientists and analysts to focus their efforts on doing actual analysis and creating bespoke analytics where needed.

This is an important consideration for organizations that are concerned about gaining the right insights more quickly so they can make good decisions–and start seeing results.